Juju Deployments at Canonical

Brad Marshall

Email: brad.marshall@canonical.com

Twitter: bradleymarshall

IRC: bradm@freenode

Who am I?

- Unix sysadmin with 20 years of experience

- Currently sysadmin at Canonical

- Worked in environments ranging from small software startups to a university

- Volunteered for Debian, SAGE-AU, linux.conf.au, HUMBUG, CQLUG

- Presentations/articles at SAGE-AU, AUUG, CQLUG, HUMBUG, Linux.com

OpenStack

- OpenStack is the primary platform for all our new services

- Gradually moving services across as time permits

- Have been using OpenStack in production for about 3 years now

- Gone through multiple iterations of deployments over the years

- As software has improved, so has deployment methodology

Software Stack

- OpenStack

- Ubuntu

- MaaS

- Juju

- Landscape

- Mojo

How to deploy

- MaaS and Juju to deploy OpenStack to bare metal

- Juju to deploy to OpenStack

- Landscape to manage servers and VMs inside OpenStack

- Mojo to validate deployments

Juju

- Service orchestration for the cloud

- Can deploy to OpenStack, MaaS, AWS, HP Cloud, Azure, Joyent, Digital Ocean, local containers, Vagrant and manually provisioned machines

- Providers being added all the time

- Charms are pre-packaged, configurable services

- Juju expresses the relationships between charms, which provides a lot of its value

- A bundle is a set of services, expressed as charms and the relations between them

- You can control where things are deployed via juju deploy --to <machine>

- Able to deploy to LXC containers, useful to run more than one service per physical host
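A minimal sketch of the placement bullets above, assuming Juju 1.x syntax: two services go into LXC containers on one existing machine and are then related. The machine number and the `mysql`/`wordpress` charms are illustrative choices, not part of Canonical's setup.

```shell
# Sketch: co-locate two services on one physical host using LXC containers.
# Assumes Juju 1.x and an already bootstrapped environment containing the
# target machine (machine 1 by default; the number is hypothetical).
deploy_colocated() {
    machine="${1:-1}"
    # Each service gets its own LXC container on the same host.
    juju deploy --to "lxc:${machine}" mysql
    juju deploy --to "lxc:${machine}" wordpress
    # The relation is what wires wordpress to its database.
    juju add-relation wordpress mysql
}
```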

Juju example

```shell
$ juju bootstrap
$ juju deploy juju-gui --to 0
$ juju deploy -n 3 --config ceph.yaml ceph --constraints "mem=2G"
$ juju deploy -n 3 --config ceph.yaml ceph-osd --constraints "mem=2G"
$ juju add-relation ceph ceph-osd
```
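The example passes `--config ceph.yaml` to both services, but the file isn't shown. A sketch of what it might contain, assuming the ceph and ceph-osd charm options `fsid`, `monitor-secret`, `monitor-count` and `osd-devices`. Juju reads the section whose key matches the service being deployed. The fsid and key are placeholders (generate real ones with `uuidgen` and `ceph-authtool --gen-print-key`), and `/dev/sdb` is an assumed data disk.

```shell
# Sketch of a ceph.yaml for the deploy commands above: one section per
# service name. The fsid and monitor-secret values are placeholders only.
cat > ceph.yaml <<'EOF'
ceph:
  fsid: 00000000-0000-0000-0000-000000000000   # placeholder: use uuidgen
  monitor-secret: REPLACE_WITH_KEY             # placeholder: ceph-authtool --gen-print-key
  monitor-count: 3
  osd-devices: /dev/sdb
ceph-osd:
  osd-devices: /dev/sdb
EOF
```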

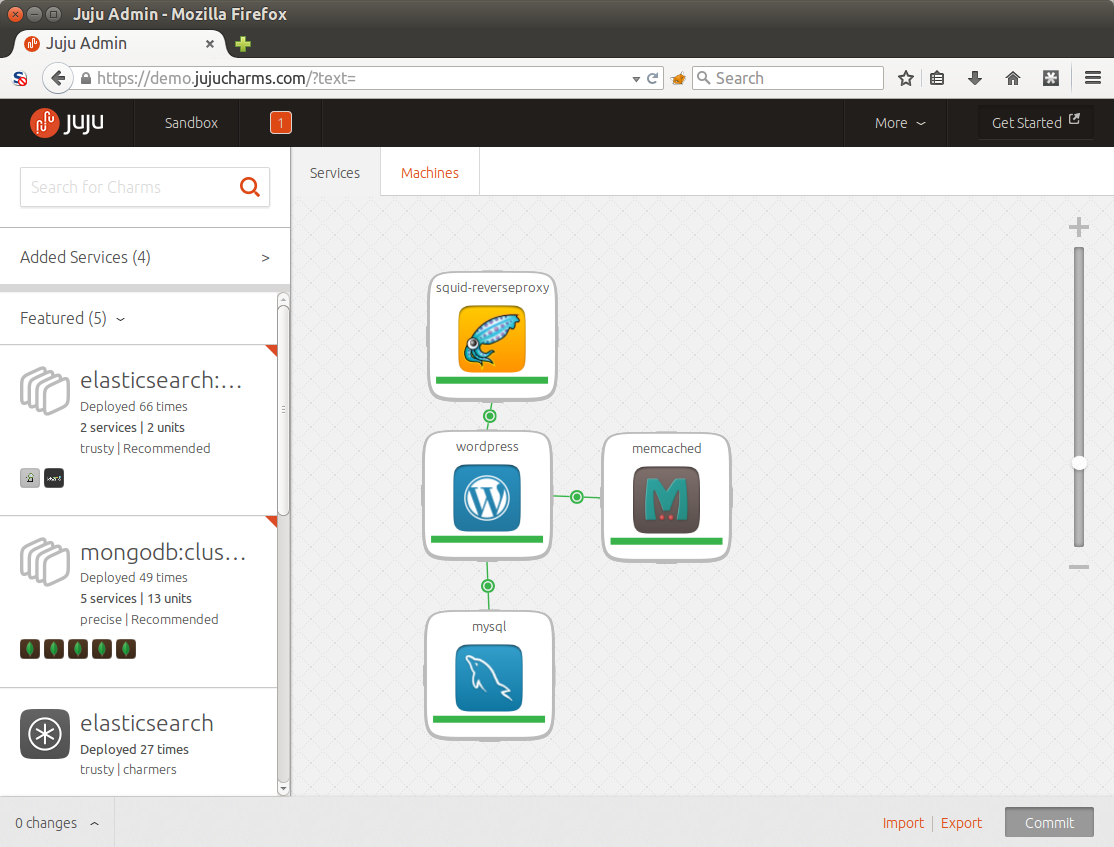

Juju GUI Services View

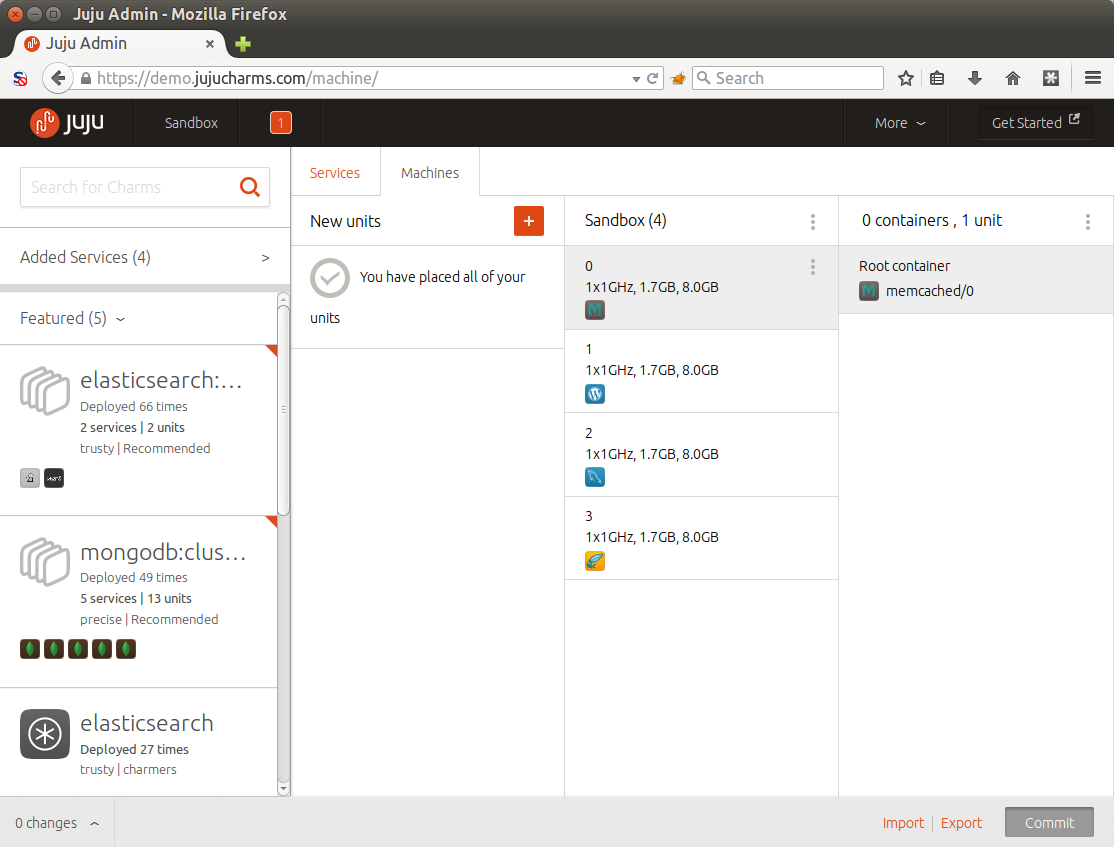

Juju GUI Machine View

MaaS - Metal As A Service

- Controls deployment of physical hosts

- Lets you treat bare metal the same as a cloud guest

- Juju has a native MaaS provider

- Can manage KVM guests as well
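For Juju to drive MaaS natively, the MaaS environment is declared like any other provider. A sketch of a Juju 1.x `environments.yaml` entry, written to a local example file rather than `~/.juju/environments.yaml`; the server URL, API key and secret are placeholders.

```shell
# Sketch: a Juju 1.x environment entry for the MaaS provider.
# URL, OAuth key and admin secret are placeholders, not working values.
cat > environments.yaml.example <<'EOF'
environments:
  maas:
    type: maas
    maas-server: 'http://maas.example.com/MAAS/'
    maas-oauth: 'REPLACE_WITH_MAAS_API_KEY'
    admin-secret: 'REPLACE_WITH_SECRET'
    default-series: trusty
EOF
```

With a real entry in `~/.juju/environments.yaml`, `juju bootstrap -e maas` acquires and installs a bare metal node where a cloud provider would start an instance.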

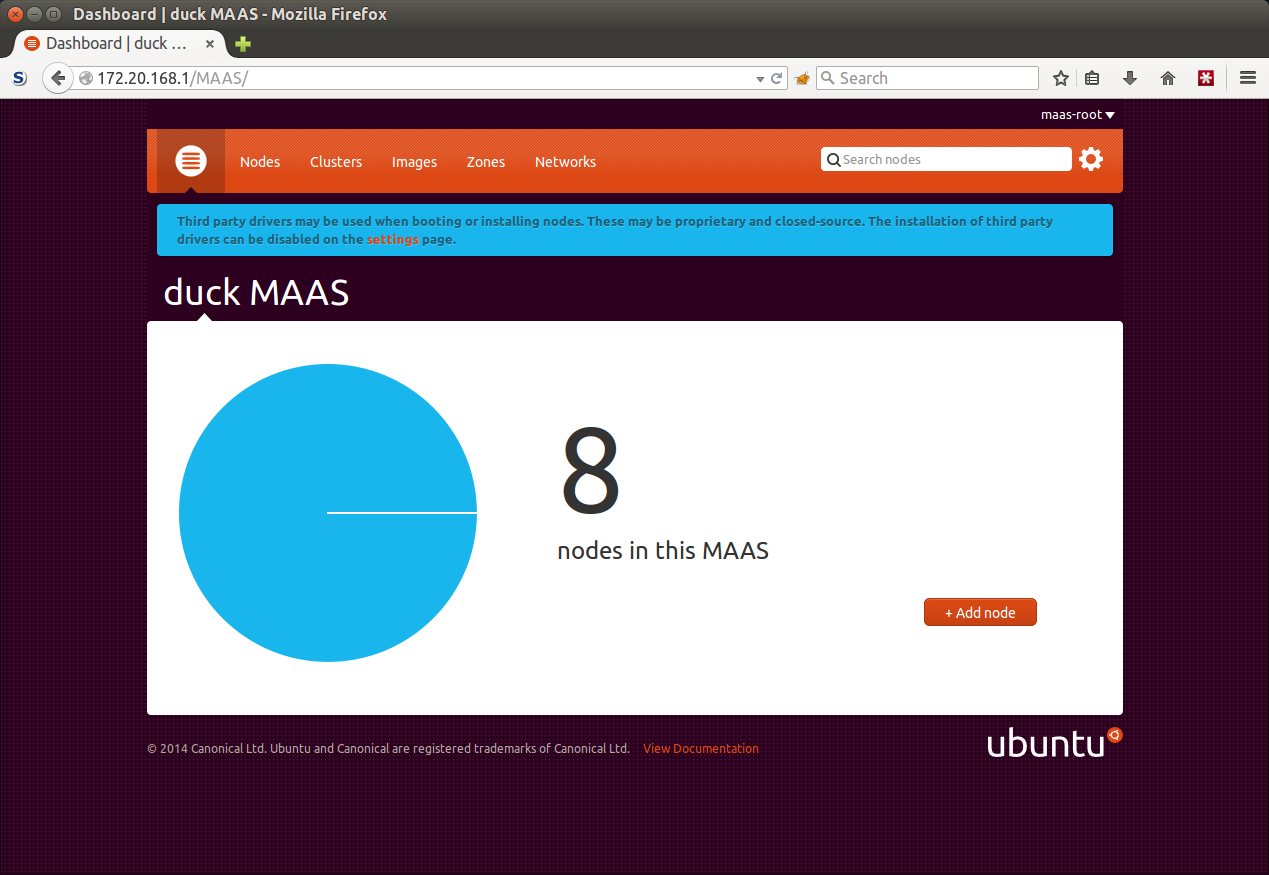

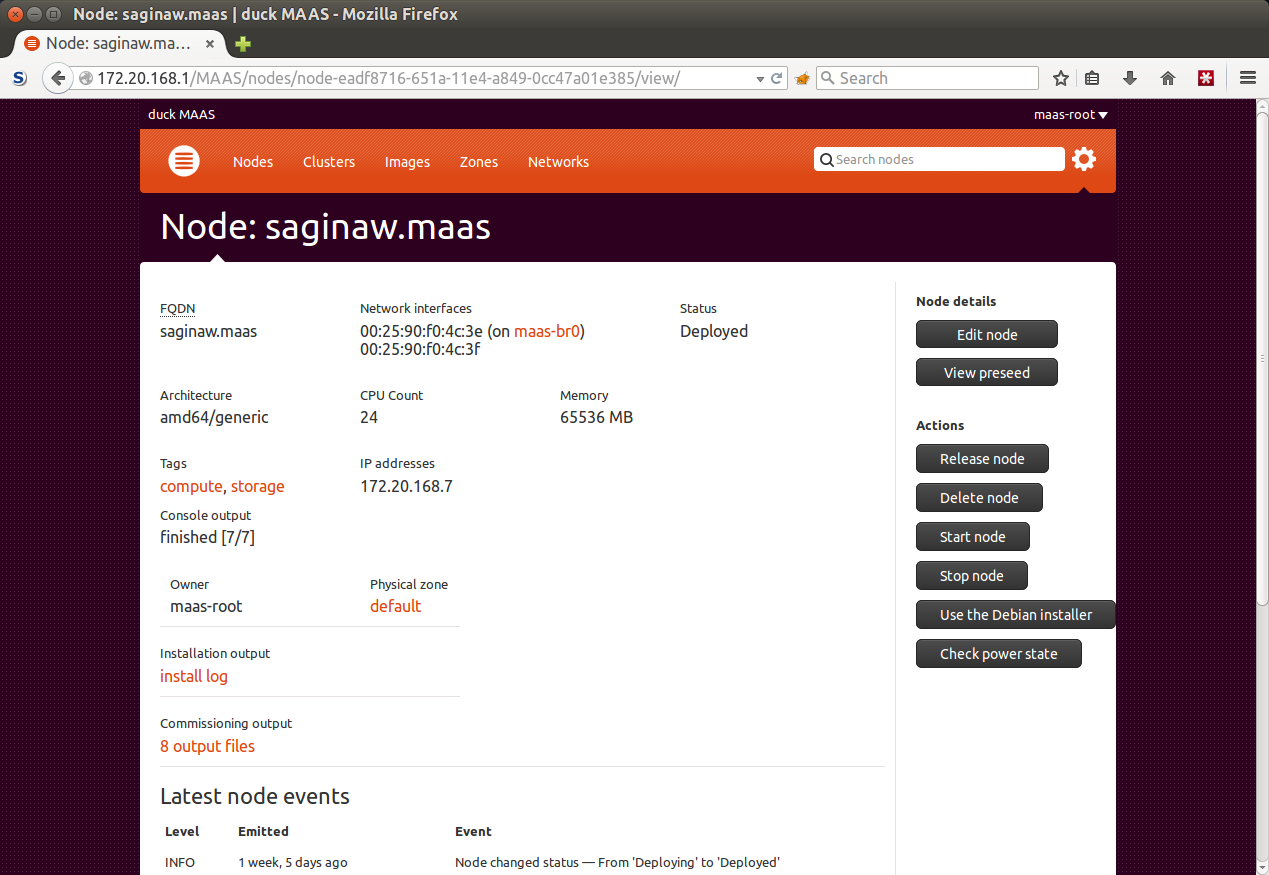

MaaS - WebUI

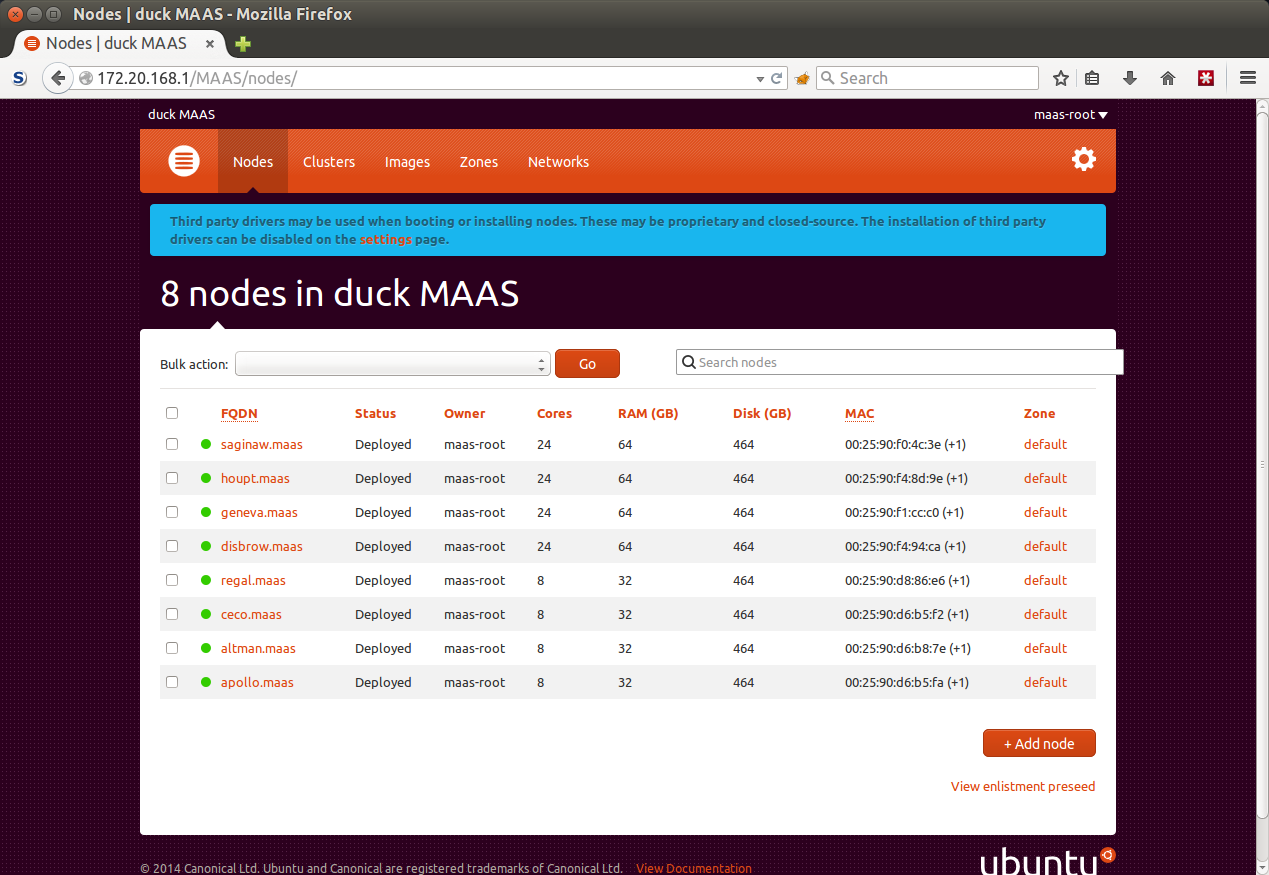

MaaS - Node list

MaaS - Example Node

Mojo - CI for Services

- Continuous integration and deployment for Juju-based services

- Can be used in conjunction with Jenkins for CI (deploy and test) of Juju services

- Used to ensure services are always deployable "from scratch"

- Can test upgrades of charms, scaling out or anything else you can script

- Uses the Nagios checks defined for services as well as other arbitrary tests

- Using it for deployments in staging and production as well

- Still a work in progress, but already helping find issues

- Recently open sourced - see https://launchpad.net/mojo
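Mojo's own manifests aren't shown here, so this is a hypothetical sketch of the idea behind its verify step rather than Mojo's code: run every check for a service and fail the run if any check fails. It follows the Nagios plugin convention of exit code 0 for OK and treats any other code (1 WARNING, 2 CRITICAL, 3 UNKNOWN) as a failure.

```shell
# Hypothetical sketch of a verify step (not Mojo's implementation).
# Each argument is a check command; any non-zero exit counts as a failure.
run_checks() {
    failed=0
    for check in "$@"; do
        if sh -c "$check" >/dev/null 2>&1; then
            echo "OK: $check"
        else
            echo "FAIL: $check"
            failed=$((failed + 1))
        fi
    done
    # Overall status: success only if every check passed.
    [ "$failed" -eq 0 ]
}
```

For example, `run_checks "/usr/lib/nagios/plugins/check_http -H localhost"` on a unit with the Nagios plugins installed; a CI job such as Jenkins can then key off the function's exit status.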

Where Is OpenStack Used

- Canonistack - dev / testing stack

- Prodstack - production stack

- Stagingstack - staging environments on Prodstack

- ScalingStack - stack for workloads that need to scale out; currently used for Launchpad PPA building and error tracking

- OpenStack Integration Lab - testing integration of hardware and software

- BootStack - managed cloud for clients

What's Canonistack?

- OpenStack installation anyone in the company can use

- 2 regions, running two different releases

- Right now we have Grizzly and Havana

- Work is ongoing on a 3rd region using Juno on Trusty, with VMware as the compute and storage driver

- Allows oversubscribing to get the most usage

- Allows dev to test across multiple releases and prototype things

- Downtime can happen, but reliability is improving over time

- We want developers to prefer this over external environments, like AWS

- Good learning environment

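To make the oversubscription point concrete: when nova's CoreFilter is enabled, the scheduler lets a compute host take up to its vCPU count multiplied by `cpu_allocation_ratio` (16.0 by default). The 24-CPU host below is hypothetical, and Canonistack's actual ratio isn't stated.

```shell
# Sketch: vCPUs nova's CoreFilter would allow on one compute host.
# host_cpus is the hypervisor-reported CPU count (hypothetical value below);
# the ratio is an integer here because POSIX sh arithmetic is integer-only.
schedulable_vcpus() {
    host_cpus="$1"
    ratio="$2"
    echo $((host_cpus * ratio))
}

schedulable_vcpus 24 16   # prints 384
```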
Prodstack 4.0

- Latest version of Prodstack

- Stagingstack is the staging version of Prodstack

- Currently running internally developed services

- Icehouse on Trusty (14.04) using Ubuntu Cloud Archive

- Migrating services across from the legacy Prodstack 3.0

- Once services are migrated from Prodstack 3.0, its hardware will be integrated into Prodstack 4.0

- Much more reliable version of Ceph, and uses Neutron

Prodstack 3.0

- Production deployment of OpenStack

- Running Precise (12.04) with Folsom

- Legacy version of Prodstack

- Using Ubuntu Cloud Archive which backports versions of OpenStack to LTS

- Some issues with early versions of software, e.g. running Ceph Argonaut

What runs in Prodstack

- Ubuntu SSO

- Click package services, which underpin the Ubuntu Phone

- Certification website

- Product search - Amazon search on desktop

- Software Center agent

- Parts of ubuntu.com website

- Juju charms website

- Juju GUI website

- errors.ubuntu.com (the retracers are migrating to ScalingStack)

- Various private git and gerrit services

- Ubuntu Developer API docs

- Many others

BootStack

- Managed cloud deployed for clients, either on location or via commercial hosting providers

- Uses Ubuntu 14.04 and Icehouse

- HA OpenStack using Juju and LXC

- Uses Landscape, Nagios and Logstash for the ops side of things

- Base deployment takes about 45 minutes, plus another 10 minutes for Nagios/Landscape

- Uses LXC containers for base OpenStack charms in HA

- Nova-compute, Swift and Ceph are co-located on the same nodes

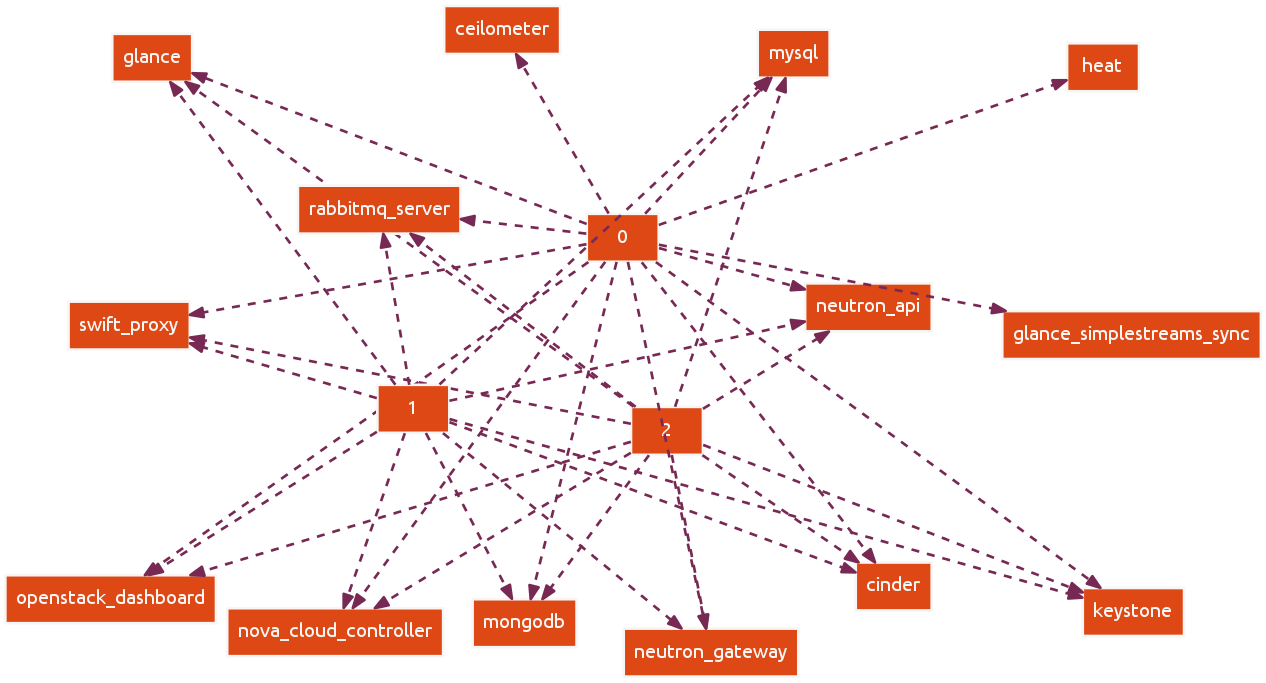

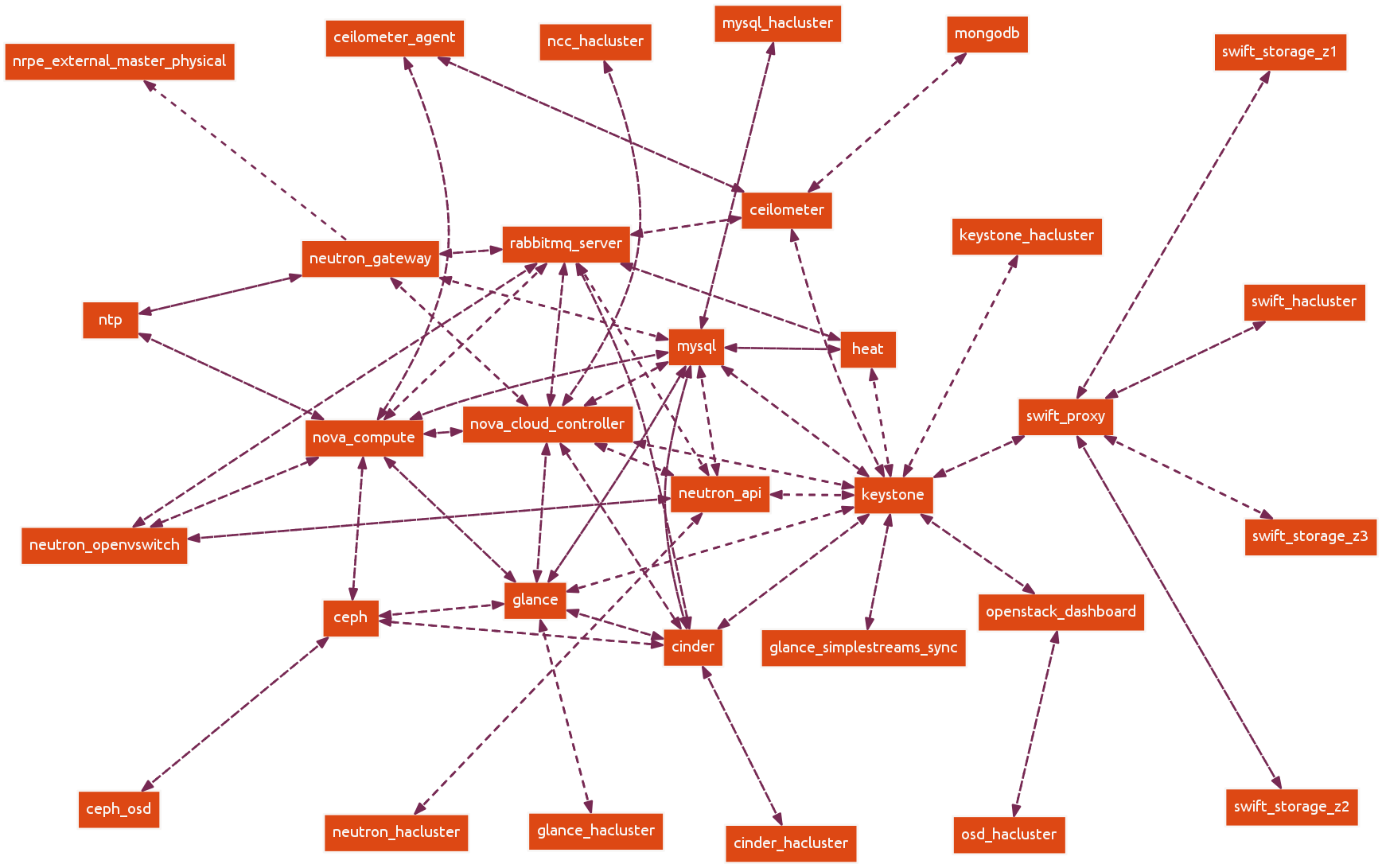

HA OpenStack via Juju charms

- Uses the ubuntu charm as a placeholder so services can be deployed into LXC containers on a host

- Uses HA Juju bootstrap nodes, which requires at least 3 instances

- The Juju bootstrap nodes also host the LXC containers for HA OpenStack

- Some parts aren't HA yet, e.g. Ceilometer, Heat

- Charms for stateless services use Corosync/Pacemaker for HA

- MySQL uses Percona XtraDB Cluster for active/active clustering

- RabbitMQ and MongoDB use native clustering

- Charms use HAProxy on each node, pointing to all units in the cluster
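A sketch of this pattern for one stateless service, assuming Juju 1.20+ commands (`ensure-availability`), the keystone charm's `vip` option and the hacluster subordinate charm. Machines 0-2 are taken to be the HA bootstrap nodes and 10.0.0.100 a free address on their network; both are assumptions.

```shell
# Sketch: HA keystone in LXC containers on the HA bootstrap nodes (Juju 1.x).
# Assumed: machines 0-2 are the state servers, 10.0.0.100 is a free VIP.
deploy_ha_keystone() {
    juju ensure-availability -n 3
    # One keystone unit in an LXC container on each bootstrap node.
    juju deploy --to lxc:0 keystone
    juju add-unit --to lxc:1 keystone
    juju add-unit --to lxc:2 keystone
    juju set keystone vip=10.0.0.100
    # hacluster is a subordinate that runs Corosync/Pacemaker alongside
    # each keystone unit and manages the VIP.
    juju deploy hacluster keystone-hacluster
    juju add-relation keystone keystone-hacluster
}
```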

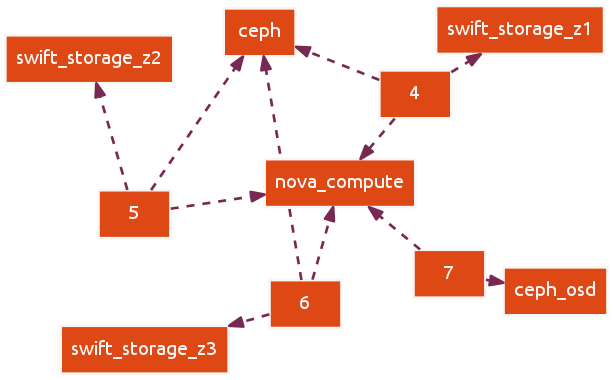
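Each charm renders HAProxy configuration listing every unit in its cluster. A hypothetical sketch of that rendering for one service; the real charms build this from relation data, and the name, ports and addresses here are assumptions.

```shell
# Hypothetical sketch: render an HAProxy "listen" section that balances one
# frontend port across every unit in the cluster.
# Usage: render_haproxy NAME FRONTEND_PORT BACKEND_PORT ADDR...
render_haproxy() {
    name="$1"; frontend_port="$2"; backend_port="$3"
    shift 3
    printf 'listen %s\n' "$name"
    printf '    bind 0.0.0.0:%s\n' "$frontend_port"
    printf '    balance roundrobin\n'
    i=0
    for addr in "$@"; do
        # One server line per unit, with health checks enabled.
        printf '    server %s-%d %s:%s check\n' "$name" "$i" "$addr" "$backend_port"
        i=$((i + 1))
    done
}

render_haproxy keystone-public 5000 4990 10.0.0.11 10.0.0.12 10.0.0.13
```

The backend port differs from the frontend port because HAProxy and the API service run on the same node and can't both bind the same port.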

Compute and Storage

- No HA for nova-compute, but it is set up for live migration

- Ceph is distributed over at least 3 hosts: 3 monitors, with any extra hosts as OSDs

- Ceph is currently only using one OSD per disk per host

- Swift is spread across at least 3 hosts, each as a separate zone

- In staging, nova-compute, Ceph and Swift run on the same hosts
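A sketch of the Swift layout with one zone per host, assuming the swift-proxy charm's `zone-assignment` and `replicas` options and the swift-storage charm's `zone` and `block-device` options; `sdb` is an assumed disk. Deploying swift-storage under a separate service name per zone is what lets each host carry its own zone number.

```shell
# Sketch: Swift across 3 hosts, one zone per host (Juju 1.x).
# Assumed charm options: swift-proxy zone-assignment/replicas,
# swift-storage zone/block-device; sdb is a hypothetical data disk.
deploy_swift_zones() {
    juju deploy swift-proxy
    juju set swift-proxy zone-assignment=manual replicas=3
    for zone in 1 2 3; do
        # A separately named service per zone gets its own zone setting.
        juju deploy swift-storage "swift-storage-z${zone}"
        juju set "swift-storage-z${zone}" zone="${zone}" block-device=sdb
        juju add-relation swift-proxy "swift-storage-z${zone}"
    done
}
```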

BootStack HA architecture

BootStack Storage and Compute

BootStack Relations

Deployment History

| Stack | Deployment type | Version |
|---|---|---|
| Canonistack lcy01 | Manual | Folsom (2012.2) upgraded to Grizzly (2013.1) on Ubuntu 12.04 |
| Canonistack lcy02 | Manual with some Puppet | Havana (2013.2) on Ubuntu 12.04 |
| Prodstack 3.0 | Juju + MaaS | Folsom (2012.2) on Ubuntu 12.04 |
| Prodstack 4.0 | Juju + MaaS with LXC | Icehouse (2014.1) on Ubuntu 14.04 |
| BootStack | Juju + MaaS with LXC and HA | Icehouse (2014.1) on Ubuntu 14.04 |

Workflow for apps

- Devs write a Juju charm and work out how to deploy it on Canonistack

- Webops (the group who work with devs) take the charm and work it into a usable deployment story on Stagingstack, going back and forth with devs as required

- Once everyone is happy, ops deploy the stack into Prodstack and into production

- Updates to apps are made by devs, tested via Mojo, rolled into Stagingstack and then onto Prodstack by ops

- This allows good involvement between ops and devs, while still keeping separation of responsibilities

- Starting a trial of devs running some staging services themselves using Mojo, restricted to non-critical services
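A hypothetical sketch of the last promotion step, upgrading a charm first in staging and then in production with Juju 1.x `upgrade-charm -e`. The environment names and the `myapp` service are made up, and the Mojo test step between them is left out.

```shell
# Hypothetical sketch: upgrade one service's charm environment by
# environment, in the order given, stopping at the first error.
# Usage: promote_charm SERVICE ENV...
promote_charm() {
    service="$1"; shift
    for env in "$@"; do
        echo "Upgrading $service in $env"
        juju upgrade-charm -e "$env" "$service" || return 1
    done
}
```

Called as `promote_charm myapp stagingstack prodstack`, it stops if `juju upgrade-charm` returns an error. That status only reflects whether Juju accepted the upgrade, not whether the charm's hooks succeeded, which is what the Mojo tests are for.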

Problems

- Learning curve for both devs and ops

- Early versions of the software have bugs to deal with - improving over time

- Having to deal with migrations as things change, e.g. nova-network to Neutron, Juju moving from Python to Go

- Usability issues with the software, e.g. using UUIDs as identifiers in the CLI - improving over time

Advantages

- Vastly improved our density of services on servers, decreasing capex (buying fewer servers) and opex (powering fewer servers)

- Dynamic scale up and down of services, e.g. we can add more website units at release time

- True reproducibility of deployments

- Staging environment that closely matches prod without too much extra hardware required

- Both devs and ops develop an awareness of each other's needs

- IS is involved in the development side of things, helping shape things to work in production

- We are upstream for the OS, so we have the opportunity to get changes in

- Dogfooding our product and providing feedback to devs

References

- Juju - https://juju.ubuntu.com/

- MaaS - http://maas.ubuntu.com/

- Mojo - https://launchpad.net/mojo

- Landscape - https://landscape.canonical.com/

- BootStack - http://www.ubuntu.com/cloud/bootstack

- OpenStack in Production by Robbie Williamson at ODS Portland, 2013

- ScalingStack: 2x performance in Launchpad's build farm

- OpenStack at Canonical by Brad Marshall at LCA2014, Perth

Questions?

Contact me via:

Email: brad.marshall@canonical.com

Twitter: bradleymarshall

IRC: bradm on Freenode

Slides at http://quark.humbug.org.au/lca2015/